AWS Graviton Technical Guide

This repository provides technical guidance for users and developers using Amazon EC2 instances powered by AWS Graviton processors (including the latest generation Graviton4 processors). While it calls out specific features of the Graviton processors themselves, this repository is also generally useful for anyone running code on Arm-based systems.

Contents

- Transitioning to Graviton

- Building for Graviton

- Optimizing for Graviton

- Taking advantage of Arm Advanced SIMD instructions

- Recent software updates relevant to Graviton

- Language-specific considerations

- Containers on Graviton

- Headless website testing with Chrome and Puppeteer on Graviton

- Lambda on Graviton

- Operating Systems support

- Third-party Software Vendors

- Finding and managing AMIs for Graviton, with AWS SystemManager or CloudFormation

- DPDK, SPDK, and other datapath software

- PyTorch

- llama.cpp

- R

- TensorFlow

- Spark on Graviton

- Known issues and workarounds

- AWS Managed Services available on Graviton

- Graviton Performance Runbook

- Assembly Optimization Guide for Graviton Arm64 Processors

- Additional resources

- How To Resources

- Blog Posts

- Case Studies

Transitioning to Graviton

If you are new to Graviton and want to understand how to identify target workloads, how to plan a transition project, how to test your workloads on AWS Graviton and finally how deploy in production, please read the key considerations to take into account when transitioning workloads to AWS Graviton based Amazon EC2 instances.

Building for Graviton

| Processor | Graviton2 | Graviton3(E) | Graviton4 |

|---|---|---|---|

| Instances | M6g/M6gd, C6g/C6gd/C6gn, R6g/R6gd, T4g, X2gd, G5g, and I4g/Im4gn/Is4gen | C7g/C7gd/C7gn, M7g/M7gd, R7g/R7gd, and Hpc7g | C8g, M8g, R8g, X8g, and I8g |

| Core | Neoverse-N1 | Neoverse-V1 | Neoverse-V2 |

| Frequency | 2500MHz | 2600MHz | 2800MHz (2700MHz for 48xlarge) |

| Turbo supported | No | No | No |

| Software Optimization Guide (Instruction Throughput and Latency) | SWOG | SWOG | SWOG |

| Interconnect | CMN-600 | CMN-650 | CMN-700 |

| Architecture revision | ARMv8.2-a | ARMv8.4-a | Armv9.0-a |

| 32bit Backward Compatibility | Userspace only | Userspace only | No support |

| Additional features | fp16, rcpc, dotprod, crypto | sve, rng, bf16, int8 | sve2, sve-int8, sve-bf16, sve-bitperm, sve-crypto |

Recommended -mcpu flag (more information) | neoverse-n1 | neoverse-512tvb | neoverse-512tvb |

| RNG Instructions | No | Yes | Yes |

| SIMD instructions | 2x Neon 128bit vectors | 4x Neon 128bit vectors / 2x SVE 256bit | 4x Neon/SVE 128bit vectors |

| LSE (atomic mem operations) | yes | yes | yes |

| Pointer Authentication | no | yes | yes |

| Branch Target Identification | no | no | yes |

| Cores | 64 | 64 | 96 per socket (192 for 2-socket 48xlarge) |

| L1 cache (per core) | 64kB inst / 64kB data | 64kB inst / 64kB data | 64kB inst / 64kB data |

| L2 cache (per core) | 1MB | 1MB | 2MB |

| LLC (shared) | 32MB | 32MB | 36MB |

| Memory (NUMA) nodes | 1 | 1 | 1 (2 for 48xlarge) |

| DRAM | 8x DDR4 | 8x DDR5 | 12x DDR5 (24x for 48xlarge) |

| DDR Encryption | yes | yes | yes |

Optimizing for Graviton

Please refer to optimizing for general debugging and profiling information. For detailed checklists on optimizing and debugging performance on Graviton, see our performance runbook.

Different architectures and systems have differing capabilities, which means some tools you might be familiar with on one architecture don't have equivalent on AWS Graviton. Documented Monitoring Tools with some of these utilities.

Furthermore, different generations of Graviton support different features, as noted in the table above. For example, Graviton3 supports SVE but Graviton2 does not. Graviton4 supports SVE2 and SVE. For some applications it may be advantageous to implement performance critical kernels to make use of the highest performing feature set available which may not be known until runtime. For this, the best practice is to consult HWCAPS. For details on how to do this, see our guide on HWCAPS runtime feature detection.

Recent software updates relevant to Graviton

There is a huge amount of activity in the Arm software ecosystem and improvements are being made on a daily basis. As a general rule later versions of compilers, language runtimes, and applications should be used whenever possible. The table below includes known recent changes to popular packages that improve performance (if you know of others please let us know).

| Package | Version | Improvements |

|---|---|---|

| bazel | 3.4.1+ | Pre-built bazel binary for Graviton/Arm64. See below for installation. |

| Cassandra | 4.0+ | Supports running on Java/Corretto 11, improving overall performance |

| FFmpeg | 6.0+ | Improved performance of libswscale by 50% with better NEON vectorization which improves the performance and scalability of FFmpeg multi-threaded encoders. The changes are available in FFmpeg version 4.3, with further improvements to scaling and motion estimation available in 5.1. Additional improvements to both are available in 6. For encoding h.265, build with the master branch of x265 because the released version of 3.5 does not include important optimizations for Graviton. For more information about FFmpeg on Graviton, read the blog post on AWS Open Source Blog, Optimized Video Encoding with FFmpeg on AWS Graviton Processors. |

| HAProxy | 2.4+ | A serious bug was fixed. Additionally, building with CPU=armv81 improves HAProxy performance by 4x so please rebuild your code with this flag. |

| MariaDB | 10.4.14+ | Default build now uses -moutline-atomics, general correctness bugs for Graviton fixed. |

| mongodb | 4.2.15+ / 4.4.7+ / 5.0.0+ | Improved performance on graviton, especially for internal JS engine. LSE support added in SERVER-56347. |

| MySQL | 8.0.23+ | Improved spinlock behavior, compiled with -moutline-atomics if compiler supports it. |

| PostgreSQL | 15+ | General scalability improvements plus additional improvements to spin-locks specifically for Arm64 |

| .NET | 5+ | .NET 5 significantly improved performance for ARM64. Here's an associated AWS Blog with some performance results. |

| OpenH264 | 2.1.1+ | Pre-built Cisco OpenH264 binary for Graviton/Arm64. |

| PCRE2 | 10.34+ | Added NEON vectorization to PCRE's JIT to match first and pairs of characters. This may improve performance of matching by up to 8x. This fixed version of the library now is shipping with Ubuntu 20.04 and PHP 8. |

| PHP | 7.4+ | PHP 7.4 includes a number of performance improvements that increase perf by up to 30% |

| pip | 19.3+ | Enable installation of python wheel binaries on Graviton |

| PyTorch | 2.0+ | Optimize Inference latency and throughput on Graviton. AWS DLCs and python wheels are available. |

| ruby | 3.0+ | Enable arm64 optimizations that improve performance by as much as 40%. These changes have also been back-ported to the Ruby shipping with AmazonLinux2, Fedora, and Ubuntu 20.04. |

| Spark | 3.0+ | Supports running on Java/Corretto 11, improving overall performance. |

| zlib | 1.2.8+ | For the best performance on Graviton please use zlib-cloudflare. |

Containers on Graviton

You can run Docker, Kubernetes, Amazon ECS, and Amazon EKS on Graviton. Amazon ECR supports multi-arch containers. Please refer to containers for information about running container-based workloads on Graviton.

Lambda on Graviton

AWS Lambda now allows you to configure new and existing functions to run on Arm-based AWS Graviton2 processors in addition to x86-based functions. Using this processor architecture option allows you to get up to 34% better price performance. Duration charges are 20 percent lower than the current pricing for x86 with millisecond granularity. This also applies to duration charges when using Provisioned Concurrency. Compute Savings Plans supports Lambda functions powered by Graviton2.

The Lambda page highlights some of the migration considerations and also provides some simple to deploy demos you can use to explore how to build and migrate to Lambda functions using Arm/Graviton2.

Operating Systems

Please check os.md for more information about which operating system to run on Graviton based instances.

Known issues and workarounds

Postgres

Postgres performance can be heavily impacted by not using LSE.

Today, postgres binaries from distributions (e.g. Ubuntu) are not built with -moutline-atomics or -march=armv8.2-a which would enable LSE. Note: Amazon RDS for PostgreSQL isn't impacted by this.

In November 2021 PostgreSQL started to distribute Ubuntu 20.04 packages optimized with -moutline-atomics.

For Ubuntu 20.04, we recommend using the PostgreSQL PPA instead of the packages distributed by Ubuntu Focal.

Please follow the instructions to set up the PostgreSQL PPA.

Python installation on some Linux distros

The default installation of pip on some Linux distributions is old (<19.3) to install binary wheel packages released for Graviton. To work around this, it is recommended to upgrade your pip installation using:

sudo python3 -m pip install --upgrade pip

Bazel on Linux

The Bazel build tool now releases a pre-built binary for arm64. As of October 2020, this is not available in their custom Debian repo, and Bazel does not officially provide an RPM. Instead, we recommend using the Bazelisk installer, which will replace your bazel command and keep bazel up to date.

Below is an example using the latest Arm binary release of Bazelisk as of October 2020:

wget https://github.com/bazelbuild/bazelisk/releases/download/v1.7.1/bazelisk-linux-arm64

chmod +x bazelisk-linux-arm64

sudo mv bazelisk-linux-arm64 /usr/local/bin/bazel

bazel

Bazelisk itself should not require further updates, as its only purpose is to keep Bazel updated.

zlib on Linux

Linux distributions, in general, use the original zlib without any optimizations. zlib-cloudflare has been updated to provide better and faster compression on Arm and x86. To use zlib-cloudflare:

git clone https://github.com/cloudflare/zlib.git

cd zlib

./configure --prefix=$HOME

make

make install

Make sure to have the full path to your lib at $HOME/lib in /etc/ld.so.conf and run ldconfig.

For JDKs that dynamically link to the system zlib, you can set LD_LIBRARY_PATH to point to the directory where your newly built version of zlib-cloudflare is located or load that library with LD_PRELOAD.

You can check the libz that JDK is dynamically linked against with:

$ ldd /Java/jdk-11.0.8/lib/libzip.so | grep libz

libz.so.1 => /lib/x86_64-linux-gnu/libz.so.1 (0x00007ffff7783000)

NOTE: Linux versions of OpenJDK and Amazon Corretto 17+ dynamically link to zlib. Earlier versions of Corretto bundled zlib directly and this behavior can vary by JDK vendor and platform.

Blog Posts

HPC

- Application deep-dive into the AWS Graviton3E-based Amazon EC2 Hpc7g instance

- Rescale Automates the Deployment of Ansys LS-DYNA and Ansys Fluent Workloads on Amazon EC2 Hpc7g Instances

- Lattice Boltzmann simulation with Palabos on AWS using Graviton-based Amazon EC2 Hpc7g instances

- Instance sizes in the Amazon EC2 Hpc7 family – a different experience

- Checkpointing HPC applications using the Spot Instance two-minute notification from Amazon EC2

- Best practices for running molecular dynamics simulations on AWS Graviton3E

Machine Learning

- Optimized PyTorch 2.0 inference with AWS Graviton processors

- Reduce Amazon SageMaker inference cost with AWS Graviton

- PyTorch blog: Optimized PyTorch 2.0 Inference with AWS Graviton processors

- PyTorch Inference Performance Tuning on AWS Graviton Processors

- PyTorch blog: Accelerated PyTorch inference with torch.compile on AWS Graviton processors

- Sprinklr improves performance by 20% and reduces cost by 25% for machine learning inference on AWS Graviton3

- Run machine learning inference workloads on AWS Graviton-based instances with Amazon SageMaker

- Accelerate NLP inference with ONNX Runtime on AWS Graviton processors

- Best-in-class LLM Performance on Arm Neoverse V1 based AWS Graviton3 CPUs

- Accelerating Popular Hugging Face Models using Arm Neoverse

- Run LLMs on CPU with Amazon SageMaker Real-time Inference

- Accelerating large-scale neural network training on CPUs with ThirdAI and AWS Graviton

- Enhancing LLM Serving with Torch Compiled RAG on AWS Graviton

- Intro to Llama on Graviton

- Small Language Models (SLMs) inference with llama.cpp on Graviton4

- Run DeepSeek R1 LLM Inference on AWS Graviton

- DeepSeek-R1 Distill Model on CPU with AWS Graviton4 for batch inference

Other

- Optimized Video Encoding with FFmpeg on AWS Graviton Processors

- Video Encoding on Graviton in 2025

- Using Amazon APerf to go from 50% below to 36% above performance target

Case Studies

HPC

- Encored Technologies Successfully Built an HPC on AWS for Weather Research & Forecasting (WRF)

- Arm Scales Performance for Chip Design Using Amazon FSx for NetApp ONTAP

- The Institut Pasteur and AWS are analysing the world's DNA, using a public database

Other

Additional resources

- AWS Graviton

- Neoverse N1 Software Optimization Guide

- Armv8 reference manual

- Package repository search tool

Feedback? ec2-arm-dev-feedback@amazon.com

Considerations when transitioning workloads to AWS Graviton based Amazon EC2 instances

AWS Graviton processors power Amazon EC2 general purpose (M8g, M7g, M7gd, M6g, M6gd, T4g), compute optimized (C8g, C7g, C7gd, C7gn, C6g, C6gd, C6gn), memory optimized (X8g, R8g, R7g, R7gd, R6g, R6gd) instances, storage optimized (I8g, I4g, Im4gn, Is4gen), HPC optimized (Hpc7g), and GPU-powered (G5g) instances that provide the best price-performance for a wide variety of Linux-based workloads. Examples include application servers, micro-services, high-performance computing, CPU-based machine learning inference, video encoding, electronic design automation, gaming, open-source databases, and in-memory caches. In most cases transitioning to AWS Graviton is as simple as updating your infrastructure-as-code to select the new instance type and associated Operating System (OS) Amazon Machine Image (AMI). However, because AWS Graviton processors implement the arm64 instruction set, there can be additional software implications. This transition guide provides a step-by-step approach to assess your workload to identify and address any potential software changes that might be needed.

Introduction - identifying target workloads

The quickest and easiest workloads to transition are Linux-based, and built using open-source components or in-house applications where you control the source code. Many open source projects already support Arm64 and by extension Graviton, and having access to the source code allows you to build from source if pre-built artifacts do not already exist. There is also a large and growing set of Independent Software Vendor (ISV) software available for Graviton (a non-exhaustive list can be found here. However if you license software you’ll want to check with the respective ISV to ensure they already, or have plans to, support the Arm64 instruction set.

Another set of opportunities, not covered in this guide, are all the managed services offered by AWS which leverage AWS Graviton processors. Those services, listed on AWS Graviton-based Manage Services page, tend to be straightforward to adopt and require a process similar to moving to a new version of underlying instance of the same architecture, or in some cases do not require any modification.

In this guide, we will focus on applications you operate on Amazon EC2 instances, either directly or in the context of orchestration engine operating on top of Amazon EC2 instances, such as Amazon ECS or Amazon EKS.

The following transition guide is organized into a logical sequence of steps as follows:

- Learning and exploring

- Step 1 - [Optional] Understand the Graviton Processor and review key documentation

- Step 2 - Explore your workload, and inventory your current software stack

- Plan your workload transition

- Step 3 - Install and configure your application environment

- Step 4 - [Optional] Build your application(s) and/or container images

- Test and optimize your workload

- Step 5 - Testing and optimizing your workload

- Step 6 - Performance testing

- Infrastructure and deployment

- Step 7 - Update your infrastructure as code

- Step 8 - Perform canary or Blue-Green deployment

Learning and exploring

Step 1 - [Optional] Understand the Graviton Processor and review key documentation

- [Optional] Start by watching re:Invent 2024 - AWS Graviton: The best price performance for your AWS workloads, re:Invent 2024 - Dive deep into the AWS Nitro System and re:Invent 2021 - Deep dive into AWS Graviton3 and Amazon EC2 C7g instances, which will give you an overview of the Graviton-based instances and some insights on how to run applications depending on their operating system, languages and runtimes.

- [Optional] Keep on learning by watching AWS Summit SF 2022 - The journey of silicon innovation at AWS to better understand Amazon long-term commitment to innovate with custom silicon.

- Get familiar with the rest of this Getting started with AWS Graviton repository which will act as a useful reference throughout your workload transition.

Step 2 - Explore your workload, and inventory your current software stack

Before starting the transition, you will need to inventory your current software stack so you can identify the path to equivalent software versions that support Graviton. At this stage it can be useful to think in terms of software you download (e.g. open source packages, container images, libraries), software you build and software you procure/license (e.g. monitoring or security agents). Areas to review:

- Operating system, pay attention to specific versions that support Graviton (usually more recent are better)

- If your workload is container based, check container images you consume for Arm64 support. Keep in mind many container images now support multiple architectures which simplifies consumption of those images in a mixed-architecture environment. See the ECR multi-arch support announcement for more details on multi-arch images.

- All the libraries, frameworks and runtimes used by the application.

- Tools used to build, deploy and test your application (e.g. compilers, test suites, CI/CD pipelines, provisioning tools and scripts). Note there are language specific sections in the getting started guide with useful pointers to getting the best performance from Graviton processors.

- Tools and/or agents used to deploy and manage the application in production (e.g. monitoring tools or security agents)

- Amazon Transform helps quickly and easily migrate software environments that provide a path to Graviton adoption - for example:

- This guide contains language specifics sections where you'll find additional per-language guidance:

As a rule the more current your software environment the more likely you will obtain the full performance entitlement from Graviton.

For each component of your software stack, check for arm64/Graviton support. A large portion of this can be done using existing configuration scripts, as your scripts run and install packages you will get messages for any missing components, some may build from source automatically while others will cause the script to fail. Pay attention to software versions as in general the more current your software is the easier the transition, and the more likely you’ll achieve the full performance entitlement from Graviton processors. If you do need to perform upgrades prior to adopting Graviton then it is best to do that using an existing x86 environment to minimize the number of changed variables. We have seen examples where upgrading OS version on x86 was far more involved and time consuming than transitioning to Graviton after the upgrade. For more details on checking for software support please see Appendix A.

Note: When locating software be aware that some tools, including GCC, refer to the architecture as AArch64, others including the Linux Kernel, call it arm64. When checking packages across various repositories, you’ll find those different naming conventions.

Plan your workload transition

Step 3- Install and configure your application environment

To transition and test your application, you will need a suitable Graviton environment. Depending on your execution environment, you may need to:

- Obtain or create an arm64 AMI to boot your Graviton instance(s) from. Depending on how you manage your AMIs, you can either start directly from an existing reference AMI for Arm64, or you can build a Golden AMI with your specific dependencies from one of the reference images (see here for a full list of supported operating systems with AMI links) ;

- If you operate a container based environment, you’ll need to build or extend an existing cluster with support for Graviton based instances. Both Amazon ECS and EKS support adding Graviton-based instances to an existing x86-based cluster. For ECS, you can add Graviton-based instances to your ECS cluster, launching them with either the AWS ECS-optimized AMI for arm64 or your own AMI after you’ve installed the ECS agent. For EKS, you will need to create a node-group with Graviton-based instances launched with the EKS optimized AMI for arm64.

- Note: you can support Graviton and x86 instances in the same Auto Scaling Group, this blog details the process using the launch template override feature.

- Complete the installation of your software stack based on the inventory created in step 2.

- Note: In many cases your installation scripts can be used as-is or with minor modifications to reference architecture specific versions of components where necessary. The first time through this may be an iterative process as you resolve any remaining dependencies.

Step 4 - [Optional] Build your application(s) and/or container images

Note: If you are not building your application or component parts of your overall application stack you may skip this step.

For applications built using interpreted or JIT’d languages, including Java, PHP or Node.js, they should run as-is or with only minor modifications. The repository contains language specific sections with recommendations, for example Java, Python, C/C++, Golang, PHP, R, Node.js, Rust or .Net. Note: if there is no language specific section, it is because there is no specific guidance beyond using a suitably current version of the language as documented here. .NET-core is a great way to benefit from Graviton-based instances, this blog post covers .NET 8 performance.

Applications using compiled languages including C, C++ or Go, need to be compiled for the Arm64 architecture. Most modern builds (e.g. using Make) will just work when run natively on Graviton-based instances, however, you’ll find language specific compiler recommendations in this repository: C/C++, Go, and Rust.

Just like an operating system, container images are architecture specific. You will need to build arm64 container image(s), to make the transition easier we recommend building multi-arch container image(s) that can run automatically on either x86-64 or arm64. Check out the container section of this repository for more details and this blog post provides a detailed overview of multi-architecture container image support, which is considered a best practice for establishing and maintaining a multi-architecture environment.

You will also need to review any functional and unit test suite(s) to ensure you can test the new build artifacts with the same test coverage you have already for x86 artifacts.

Test and optimize your workload

Step 5 - Testing and optimizing your workload

Now that you have your application stack on Graviton, you should run your test suite to ensure all regular unit and functional tests pass. Resolve any test failures in the application(s) or test suites until you are satisfied everything is working as expected. Most errors should be related to the modifications and updated software versions you have installed during the transition (tip: when upgrading software versions first test them using an existing x86 environment to minimize the number of variables changed at a time. If issues occur then resolve them using the current x86 environment before continuing with the new Graviton environment). If you suspect architecture specific issue(s) please have a look to our C/C++ section which documents them and give advice on how to solve them. If there are still details that seem unclear, please reach out to your AWS account team, or to the AWS support for assistance.

Step 6 - Performance testing

With your fully functional application, it is time to establish a performance baseline on Graviton. In many cases, Graviton will provide performance and/or capacity improvements over x86-based instances.

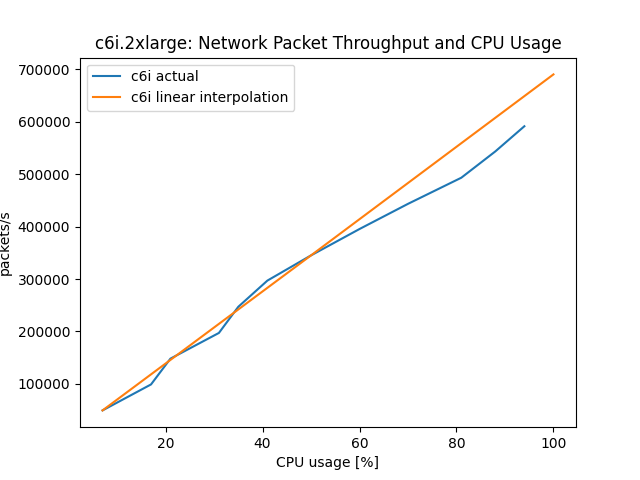

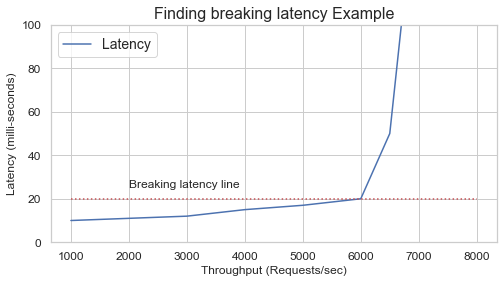

One of the major differences between AWS Graviton instances and other Amazon EC2 instances is their vCPU-to-physical-core mappings. Every vCPU on a Graviton processor maps to a physical core, and there is no Simultaneous Multi-Threading (SMT). Consequently, Graviton provides better linear performance scalability in most cases. When comparing to existing x86 instances, we recommend fully loading both instance types to determine the maximum sustainable load before the latency or error rate exceeds acceptable bounds. For horizontally-scalable, multi-threaded workloads that are CPU bound, you may find that the Graviton instances are able to sustain a significantly higher transaction rate before unacceptable latencies or error rates occur.

During the transition to Graviton, if you are using Amazon EC2 Auto Scaling, you may be able to increase the threshold values for the CloudWatch alarms that invoke the scaling process. This may reduce the number of EC2 instances now needed to serve a given level of demand.

Important: This repository has sections dedicated to Optimization and a Performance Runbook for you to follow during this stage.

If after reading the documentation in this repository and following the recommendations you do not observe expected performance then please reach out to your AWS account team, or send email to ec2-arm-dev-feedback@amazon.com with details so we can assist you with your performance observations.

Infrastructure and deployment

Step 7 - Update your infrastructure as code

Now you have a tested and performant application, its time to update your infrastructure as code to add support for Graviton-based instances. This typically includes updating instance types, AMI IDs, ASG constructs to support multi-architecture (see Amazon EC2 ASG support for multiple Launch Templates), and finally deploying or redeploying your infrastructure.

Step 8 - Perform canary or Blue-Green deployment

Once your infrastructure is ready to support Graviton-based instances, you can start a Canary or Blue-Green deployment to re-direct a portion of application traffic to the Graviton-based instances. Ideally initial tests will run in a development environment to load test with production traffic patterns. Monitor the application closely to ensure expected behavior. Once your application is running as expected on Graviton you can define and execute your transition strategy and begin to enjoy the benefits of increased price-performance.

Appendix A - locating packages for Arm64/Graviton

Remember: When locating software be aware that some tools, including GCC, refer to the architecture as AArch64, others including the Linux Kernel, call it arm64. When checking packages across various repositories, you’ll find those different naming conventions, and in some cases just “ARM”.

The main ways to check and places to look for will be:

- Package repositories of your chosen Linux distribution(s). Arm64 support within Linux distributions is largely complete: for example, Debian, which has the largest package repository, has over 98% of its packages built for the arm64 architecture.

- Container image registry. Amazon ECR now offers public repositories that you can search for arm64 images. DockerHub allows you to search for a specific architecture (e.g. arm64).

- Note: Specific to containers you may find an amd64 (x86-64) container image you currently use transitioned to a multi-architecture container image when adding Arm64 support. This means you may not find an explicit arm64 container, so be sure to check for both as projects may chose to vend discrete images for x86-64 and arm64 while other projects chose to vend a multi-arch image supporting both architectures.

- On GitHub, you can check for arm64 versions in the release section. However, some projects don’t use the release section, or only release source archives, so you may need to visit the main project webpage and check the download section. You can also search the GitHub project for “arm64” or “AArch64” to see whether the project has any arm64 code contributions or issues. Even if a project does not currently produce builds for arm64, in many cases an Arm64 version of those packages will be available through Linux distributions or additional package repositories. You can search for packages using a package search tool such as pkgs.org.

- The download section or platform support matrix of your software vendors, look for references to arm64, AArch64 or Graviton.

Categories of software with potential issues:

- Packages or applications sourced from an ISV may not yet be available for Graviton. AWS is working with lots of software partners to offer technical guidance as they add support for Graviton, but some are still missing or in the process of adding support. A non-exhaustive list of some ISV software can be found in here.

- The Python community vend lots of modules built using low level languages (e.g. C/C++) that need to be compiled for the Arm64 architecture. You may use modules that are not currently available as pre-built binaries from the Python Package Index. AWS is actively working with open-source communities to ensure the most popular modules are available. In the meantime we provide specific instructions to resolve the build-time dependencies for missing packages in the Python section of the Graviton Getting Started Guide.

If you find other software lacking support for Arm64, please let your AWS team know, or send email to ec2-arm-dev-feedback@amazon.com.

Optimizing for Graviton

Debugging Problems

It's possible that incorrect code will work fine on an existing system, but produce an incorrect result when using a new compiler. This could be because it relies on undefined behavior in the language (e.g. assuming char is signed in C/C++, or the behavior of signed integer overflow), contains memory management bugs that happen to be exposed by aggressive compiler optimizations, or incorrect ordering. Below are some techniques / tools we have used to find issues while migrating our internal services to newer compilers and Graviton based instances.

Using Sanitizers

The compiler may generate code and layout data slightly differently on Graviton compared to an x86 system and this could expose latent memory bugs that were previously hidden. On GCC, the easiest way to look for these bugs is to compile with the memory sanitizers by adding the below to standard compiler flags:

CFLAGS += -fsanitize=address -fsanitize=undefined

LDFLAGS += -fsanitize=address -fsanitize=undefined

Then run the resulting binary, any bugs detected by the sanitizers will cause the program to exit immediately and print helpful stack traces and other information.

Ordering issues

Arm is weakly ordered, similar to POWER and other modern architectures. While x86 is a variant of total-store-ordering (TSO). Code that relies on TSO may lack barriers to properly order memory references. Armv8 based systems, including all Gravitons are weakly ordered multi-copy-atomic.

While TSO allows reads to occur out-of-order with writes and a processor to observe its own write before it is visible to others, the Armv8 memory model has further relaxations for performance and power efficiency. Code relying on pthread mutexes or locking abstractions found in C++, Java or other languages shouldn't notice any difference. Code that has a bespoke implementation of lockless data structures or implements its own synchronization primitives will have to use the proper intrinsics and barriers to correctly order memory transactions. If you run into an issue with memory ordering please feel free to open an issue in this GitHub repo, and one of our AWS experts will contact you.

Architecture specific optimization

Sometimes code will have architecture specific optimizations. These can take many forms:

sometimes the code is optimized in assembly using specific instructions for

CRC,

other times the code could be enabling a feature

that has been shown to work well on particular architectures. A quick way to see if any optimizations

are missing for Arm is to grep the code for __x86_64__ ifdefs and see if there

is corresponding Arm code there too. If not, that might be something to improve.

We welcome suggestions by opening an issue in this repo.

Lock/Synchronization intensive workload

Graviton2 processors and later support the Arm Large Scale Extensions (LSE). LSE based locking and synchronization

is an order of magnitude faster for highly contended locks with high core counts (e.g. up to 192 cores on Graviton4).

For workloads that have highly contended locks, compiling with -march=armv8.2-a will enable LSE based atomics and can substantially increase performance. However, this will prevent the code

from running on an Arm v8.0 system such as AWS Graviton-based EC2 A1 instances.

With GCC 10 and newer an option -moutline-atomics will not inline atomics and

detect at run time the correct type of atomic to use. This is slightly worse

performing than -march=armv8.2-a but does retain backwards compatibility.

Network intensive workloads

In some workloads, the packet processing capability of Graviton is both faster and

lower-latency than other platforms, which reduces the natural “coalescing”

capability of Linux kernel and increases the interrupt rate.

Depending on the workload it might make sense to enable adaptive RX interrupts

(e.g. ethtool -C <interface> adaptive-rx on).

Profiling the code

If you aren't getting the performance you expect, one of the best ways to understand what is going on in the system is to compare profiles of execution and understand where the CPUs are spending time. This will frequently point to a hot function or sub-system that could be optimized. A crutch is comparing a profile between a system that is performing well and one that isn't to see the relative difference in execution time. Feel free to open an issue in this GitHub repo for advice or help.

Using AWS APerf tool:

# Graviton

wget -qO- https://github.com/aws/aperf/releases/download/v0.1.10-alpha/aperf-v0.1.10-alpha-aarch64.tar.gz | tar -xvz -C /target/directory

# x86

wget -qO- https://github.com/aws/aperf/releases/download/v0.1.10-alpha/aperf-v0.1.10-alpha-x86_64.tar.gz | tar -xvz -C /target/directory

## Record a profile and generate a report

cd /target/directory/

./aperf record -r <RUN_NAME> -i <INTERVAL_NUMBER> -p <COLLECTION_PERIOD>

./aperf report -r <COLLECTOR_DIRECTORY> -n <REPORT_NAME>

## The resulting report can be viewed with a web-browser by opening the index.html file

Using the Linux perf tool:

# Amazon Linux 2

sudo yum install perf

# Ubuntu

sudo apt-get install linux-tools-$(uname -r)

Record a profile:

# If the program is run interactively

$ sudo perf record -g -F99 -o perf.data ./your_program

# If the program is a service, sample all cpus (-a) and run for 60 seconds while the system is loaded

$ sudo perf record -ag -F99 -o perf.data sleep 60

Look at the profile:

$ perf report

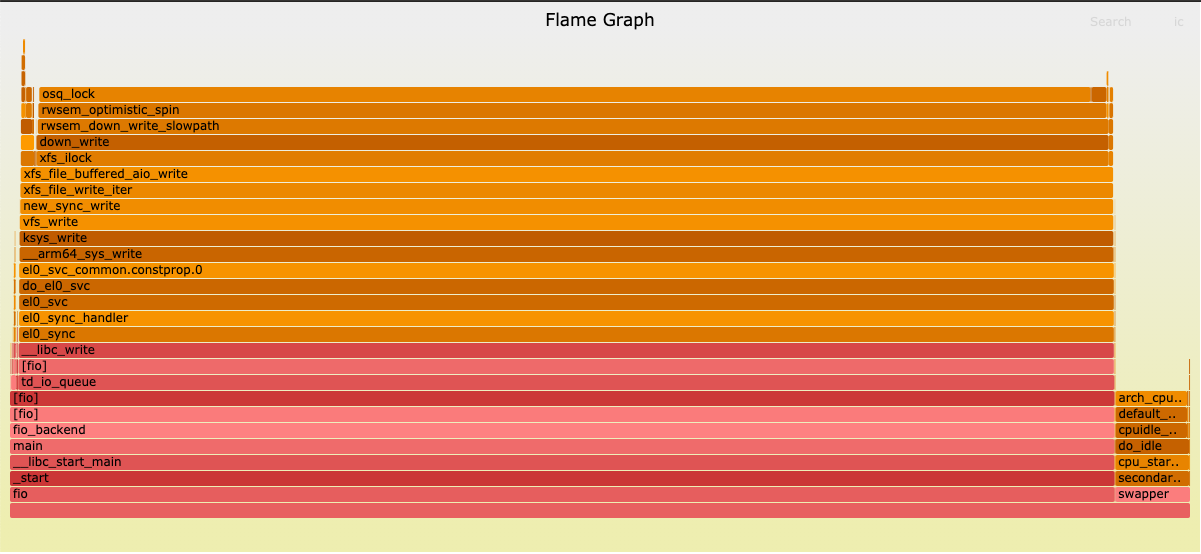

Additionally, there is a tool that will generate a visual representation of the output which can sometimes be more useful:

git clone https://github.com/brendangregg/FlameGraph.git

perf script -i perf.data | FlameGraph/stackcollapse-perf.pl | FlameGraph/flamegraph.pl > flamegraph.svg

For example, in March 2020, we committed a patch to

ffmpeg to

improve performance. Comparing the execution time of a C5 vs an M6g

immediately uncovered an outlier function ff_hscale_8_to_15_neon. Once we

identified this as the outlier we could focus on improving this function.

C5.4XL M6g.4XL

19.89% dv_encode_video_segment 19.57% ff_hscale_8_to_15_neon

11.21% decode_significance_x86 18.02% get_cabac

8.68% get_cabac 15.08% dv_encode_video_segment

8.43% ff_h264_decode_mb_cabac 5.85% ff_jpeg_fdct_islow_8

8.05% ff_hscale8to15_X4_ssse3 5.01% ff_yuv2planeX_8_neon

C/C++ on Graviton

Enabling Arm Architecture Specific Features

TLDR: To target all current generation Graviton instances (Graviton2,

Graviton3, and Graviton4), use -march=armv8.2-a.

C and C++ code can be built for Graviton with a variety of flags, depending on

the goal. If the goal is to get the best performance for a specific generation,

select a flag from the table column "performance". If the goal is to get a good

compromise of feature availability and performance balanced across generations,

select the flag from the "balanced" column. If you want to target multiple

generations of Graviton, select the "balanced" flag for the oldest generation

planned for deployment, since code built for a newer generation may not run on

an older generation. On arm64 -mcpu= acts as both specifying the appropriate

architecture and tuning and it's generally better to use that vs -march if

you're building for a specific CPU.

| CPU | Flag (performance) | Flag (balanced) | GCC version | LLVM version |

|---|---|---|---|---|

| Graviton2 | -mcpu=neoverse-n1 ¹ | -march=armv8.2-a | GCC-9 | Clang/LLVM 10+ |

| Graviton3(E) | -mcpu=neoverse-v1 | -mcpu=neoverse-512tvb ² | GCC 11 | Clang/LLVM 14+ |

| Graviton4 | -mcpu=neoverse-v2 | -mcpu=neoverse-512tvb ² | GCC 13 | Clang/LLVM 16+ |

¹ Requires GCC-9 or later (or GCC-7 for Amazon Linux 2); otherwise we suggest

using -mcpu=cortex-a72

² If your compiler doesn't support neoverse-512tvb, please use the Graviton2

tuning.

For some applications, it may be necessary to support a broad range of Arm64

targets while still making use of more advanced features such as LSE (Large

System Extensions) or SVE (Scalable Vector Extension). For this case choose a

more conservative build flag, such as -march=armv8-a and make use of runtime

CPU support detection of features such as SVE. You can enable runtime detection

and use of LSE atomics instructions by adding the additional compiler flag,

-moutline-atomics.

Compilers

Newer compilers provide better support and optimizations for Graviton processors. We have seen 15% better performance on Graviton2 when using gcc-10 instead of Amazon Linux 2 system's compiler gcc-7. When possible please use the latest compiler version available on your system. The table shows GCC and LLVM compiler versions available in Linux distributions. Starred version marks the default system compiler.

| Distribution | GCC | Clang/LLVM |

|---|---|---|

| Amazon Linux 2023 | 11* | 15* |

| Amazon Linux 2 | 7*, 10 | 7, 11* |

| Ubuntu 24.04 | 9, 10, 11, 12, 13*, 14 | 14, 15, 16, 17, 18* |

| Ubuntu 22.04 | 9, 10, 11*, 12 | 11, 12, 13, 14* |

| Ubuntu 20.04 | 7, 8, 9*, 10 | 6, 7, 8, 9, 10, 11, 12 |

| Ubuntu 18.04 | 4.8, 5, 6, 7*, 8 | 3.9, 4, 5, 6, 7, 8, 9, 10 |

| Debian10 | 7, 8* | 6, 7, 8 |

| Red Hat EL8 | 8*, 9, 10 | 10 |

| SUSE Linux ES15 | 7*, 9, 10 | 7 |

Large-System Extensions (LSE)

All Graviton processors after Graviton1 have support for the Large-System Extensions (LSE) which was first introduced in vArmv8.1. LSE provides low-cost atomic operations which can improve system throughput for CPU-to-CPU communication, locks, and mutexes. The improvement can be up to an order of magnitude when using LSE instead of load/store exclusives.

POSIX threads library needs LSE atomic instructions. LSE is important for locking and thread synchronization routines. The following systems distribute a libc compiled with LSE instructions:

- Amazon Linux 2

- Amazon Linux 2023

- Ubuntu 18.04 (needs

apt install libc6-lse) - Ubuntu 20.04

- Ubuntu 22.04

- Ubuntu 24.04

The compiler needs to generate LSE instructions for applications that use atomic

operations. For example, the code of databases like PostgreSQL contain atomic

constructs; c++11 code with std::atomic statements translate into atomic

operations. GCC's -march=armv8.2-a flag enables all instructions supported by

Graviton2, including LSE. To confirm that LSE instructions are created,

the output of objdump command line utility should contain LSE instructions:

$ objdump -d app | grep -i 'cas\|casp\|swp\|ldadd\|stadd\|ldclr\|stclr\|ldeor\|steor\|ldset\|stset\|ldsmax\|stsmax\|ldsmin\|stsmin\|ldumax\|stumax\|ldumin\|stumin' | wc -l

To check whether the application binary contains load and store exclusives:

$ objdump -d app | grep -i 'ldxr\|ldaxr\|stxr\|stlxr' | wc -l

Porting codes with SSE/AVX intrinsics to NEON

When programs contain code with x64 intrinsics, the following procedure can help to quickly obtain a working program on Arm, assess the performance of the program running on Graviton processors, profile hot paths, and improve the quality of code on the hot paths.

To quickly get a prototype running on Arm, one can use

https://github.com/DLTcollab/sse2neon a translator of x64 intrinsics to NEON.

sse2neon provides a quick starting point in porting performance critical codes

to Arm. It shortens the time needed to get an Arm working program that then

can be used to extract profiles and to identify hot paths in the code. A header

file sse2neon.h contains several of the functions provided by standard x64

include files like xmmintrin.h, only implemented with NEON instructions to

produce the exact semantics of the x64 intrinsic. Once a profile is

established, the hot paths can be rewritten directly with NEON intrinsics to

avoid the overhead of the generic sse2neon translation.

Signed vs. Unsigned char

The C standard doesn't specify the signedness of char. On x86 char is signed by

default while on Arm it is unsigned by default. This can be addressed by using

standard int types that explicitly specify the signedness (e.g. uint8_t and int8_t)

or compile with -fsigned-char.

When using the getchar function, instead of the commonly used but incorrect:

char c;

while((c = getchar()) != EOF) {

// Do something with the character c

}

// Assume we have reached the end of file here

you should use an int type and the standard function feof and ferror to

check for the end of file, as follows:

int c;

while ((c = getchar()) != EOF) {

// Do something with the character c

}

// Once we get EOF, we should check if it is actually an EOF or an error

if (feof(stdin)) {

// End of file has been reached

} else if (ferror(stdin)) {

// Handle the error (check errno, etc)

}

Using Arm instructions to speed-up Machine Learning

Graviton2 and later processors been optimized for performance and power efficient machine learning by enabling Arm dot-product instructions commonly used for Machine Learning (quantized) inference workloads, and enabling Half precision floating point - _float16 to double the number of operations per second, reducing the memory footprint compared to single precision floating point (_float32), while still enjoying large dynamic range.

Using SVE

The scalable vector extensions (SVE) require both a new enough tool-chain to auto-vectorize to SVE (GCC 11+, LLVM 14+) and a 4.15+ kernel that supports SVE. One notable exception is that Amazon Linux 2 with a 4.14 kernel doesn't support SVE; please upgrade to a 5.4+ AL2 kernel. Graviton3 and Graviton4 support SVE, earlier Gravitons does not.

Using Arm instructions to speed-up common code sequences

The Arm instruction set includes instructions that can be used to speedup common code sequences. The table below lists common operations and links to code sequences:

| Operation | Description |

|---|---|

| crc | Graviton processors support instructions to accelerate both CRC32 which is used by Ethernet, media and compression and CRC32C (Castagnoli) which is used by filesystems. |

Go on Graviton

Go is a statically typed, compiled programming language originally designed at Google. Go supports arm64 out of the box, and available in all common distributions, with recent changes that improve performance, so make sure to use the latest version of the Go compiler and toolchain.

Noteworthy performance upgrades

Go 1.18 [released 2022/03/14]

The main implementation of the Go compiler, golang/go, has improved performance on Arm by implementing a new way of passing function arguments and results using registers instead of the stack. This change has been available on x86-64 since 1.17, where it brought performance improvements of about 5%. On Arm this change typically gives even higher performance improvements of 10% or more.

To learn more about the use cases benefiting from Go 1.18's performance improvements, check the blog post: Making your Go workloads up to 20% faster with Go 1.18 and AWS Graviton.

Go 1.17 [released 2021/08/16]

The main implementation of the Go compiler, golang/go, has improved performance for the following standard library packages:

- crypto/ed25519 - the package has been rewritten, and all operations are now approximately twice as fast on both arm64 and amd64.

- crypto/elliptic - CurveParams methods now automatically invoke faster and safer dedicated implementations for known curves (P-224, P-256, and P-521) when available. The P521 curve implementation has also been rewritten and is now constant-time and three times faster on amd64 and arm64.

Go 1.16 [released 2021/02/16]

The main implementation of the Go compiler, golang/go, has improved performance on Arm with couple of changes listed below. Building your project with Go 1.16 will give you these improvements:

- ARMv8.1-A Atomics instructions, which dramatically improve mutex fairness and speed on Graviton 2, and modern Arm core with v8.1 and newer instruction set.

- copy performance improvements, especially when the addresses are unaligned.

Recently updated packages

Changes to commonly used packages that improve performance on Arm can make a noticeable difference in some cases. Here is a partial list of packages to be aware of.

| Package | Version | Improvements |

|---|---|---|

| Snappy | as of commit 196ae77 | assembly implementations of the hot path functions were ported from amd64 to arm64 |

Using Go in a Container with CPU Limits

Go automatically assigns a sensible value to GOMAXPROCS based on the number of

CPU cores available. However, using a container with a limitation on how much

CPU is available to that container can lead to problems. For example, using the

CFS scheduler

option in Docker, --cpus=1 can limit the available CPU time to the

equivalent of 1 CPU while still exposing all of the actually available CPUs to

the container. If you use CPU limits in this way, it may make sense to also

manually set GOMAXPROCS to an equivalent value.

Java on Graviton

Java is a general-purpose programming language. Compiled Java code can run on all platforms that support Java, without the need for recompilation. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. Note: there are multiple programming languages such as Kotlin, Scala, and Groovy that compile to byte code and run on top of the JVM, so those also tend to be highly portable across architectures. Wikipedia

Java is well supported and generally performant out-of-the-box on arm64. Amazon Corretto, a no-cost, multiplatform, production-ready distribution of the Open Java Development Kit (OpenJDK) supports Graviton-powered instances. While Java 8 is fully supported on Arm processors, some customers haven't been able to obtain Graviton's full performance benefit until they switched to Java 11.

This page includes specific details about building and tuning Java application on Graviton.

Java Graviton Migration Checklist

This checklist summarizes portions of the Java on Graviton section and can be helpful for getting started. It is not a comprehensive summary, so we also recommend reading through all the content below.

-

Check AMI & Kernel Version support

- Pre-2020 Linux distributions are unlikely to contain the right optimizations.

- Amazon Linux: AL2023 is ideal. AL2 is fine with a recent kernel (i.e. not 4.14). AL2 is EOL in June 2025.

- Ubuntu: Use at least Ubuntu 20.04. More recent versions are even better.

- Red Hat Linux: RHEL9 is ideal. Use at least RHEL8.2 (be aware kernel uses unusual 64KB memory pages).

- For the full list, see Operating Systems available for Graviton based instances.

-

Check JVM Version & Flavor

- Java: The more recent the better. AWS recommends at least JDK11, but ideally JDK17 or newer. JDK8 is the minimum version supporting Arm64 but likely won’t provide the best performance.

- Amazon Corretto (Amazon’s distribution of OpenJDK): Typically provides the best performance and is recommended for Graviton workloads. Corretto 11 and above support LSE extensions, a set of atomic memory operations that improve performance for lock-contended workloads and reduce garbage collection time. Some customers have seen even better performance on Corretto 17 and Corretto 21.

-

Check JARs and shared objects for architecture specific code (compiled other than Java byte code)

- See guidance for manual scanning process.

- Porting Advisor for Graviton can scan/flag them and is useful for Maven-based projects.

- JNI extensions usually exist to implement performance critical functions in a language other than Java. Without an Arm64 version the code may not work or can fall back on a slower pure Java implementation. Check for Arm64 versions or later versions of the package to see if the JNI has been superseded by more performant native Java implementations.

- Follow these instructions for building multi-arch JAR’s that support both x86 and Arm64/Graviton.

-

Java Crypto operations:

- Review Java JVM options for Crypto optimizations and recommendations.

- AES/GCM benefits when using AES hardware instructions, which can improve performance by up to 5x for this algorithm. Corretto & OpenJDK 18 support this by default and have been back-ported to Corretto & OpenJDK 11 and 17 which can be enabled using

XX:+UnlockDiagnosticVMOptions -XX:+UseAESCTRIntrinsics. - Amazon-corretto-crypto-provider is another option that offers optimizations for a large number of cryptographic operations.

-

Application Testing & Performance Evaluation

- Be sure to run Graviton instances “hotter”: vCPUs are mapped to physical cores instead of Hyperthreads and performance often flatlines at a much higher CPU utilization than with x86 based instances. Testing at low levels of load can lead to misleading results. The most realistic test results are usually achieved when testing close to breaking latency.

- See the Graviton Performance Runbook for more info.

- Aperf is a CLI tool for gathering & visualizing performance data that can be helpful.

Java versions

JDK binaries for arm64 are available from a number of different sources. Amazon Corretto is continuing to improve performance of Java workloads running on Graviton processors and if you have the choice of a JDK to use we recommend using Corretto as it provides the fastest way to get access to the performance improvements AWS is making.

Versions of Corretto released since October 2020 are built to use the most optimal atomic operations within the JVM: Corretto11 (all variants); Correto8 (on Amazon Linux 2 only). This has shown to reduce GC time in some workloads, and avoids contention in net-intensive workloads like Apache Kafka.

Versions of Corretto11 (>=11.0.12) come with additional enhancements to improve

performance on workloads with light to moderate lock-contention: improved spin-lock behavior inside the JVM,

enhanced implementation of Thread.onSpinWait() on Graviton.

Java JVM Options

There are numerous options that control the JVM and may lead to better performance.

-

Flags

-XX:-TieredCompilation -XX:ReservedCodeCacheSize=64M -XX:InitialCodeCacheSize=64Mhave shown large (1.5x) improvements in some Java workloads. Corretto 17 needs two additional flags:-XX:CICompilerCount=2 -XX:CompilationMode=high-only.ReservedCodeCacheSize/InitialCodeCacheSizeshould be equal and can be in range: 64M...127M. The JIT compiler stores generated code in the code cache. The flags change the size of the code cache from the default 240M to the smaller one. The smaller code cache may help CPU to improve the caching and prediction of jitted code. The flags disable the tiered compilation to make the JIT compiler able to use the smaller code cache. These are helpful on some workloads but can hurt on others so testing with and without them is essential. -

Crypto algorithm AES/GCM used by TLS has been optimized for Graviton. On Graviton2 GCM encrypt/decrypt performance improves by 3.5x to 5x. The optimization is enabled by default in Corretto and OpenJDK 18 and later. The optimization has been backported to Corretto and OpenJDK 11 and 17 and can be enabled with the flags

-XX:+UnlockDiagnosticVMOptions -XX:+UseAESCTRIntrinsics. As an alternative, you can use Amazon Corretto Crypto Provider JNI libraries.

Java Stack Size

The default stack size for Java threads (i.e. ThreadStackSize) is 2mb on aarch64 (compared to 1mb on x86_64). You can check the default with:

$ java -XX:+PrintFlagsFinal -version | grep ThreadStackSize

intx CompilerThreadStackSize = 2048 {pd product} {default}

intx ThreadStackSize = 2048 {pd product} {default}

intx VMThreadStackSize = 2048 {pd product} {default}

The default can be easily changed on the command line with either -XX:ThreadStackSize=<kbytes> or -Xss<bytes>. Notice that -XX:ThreadStackSize interprets its argument as kilobytes whereas -Xss interprets it as bytes. So -XX:ThreadStackSize=1024 and -Xss1m will both set the stack size for Java threads to 1 megabyte:

$ java -Xss1m -XX:+PrintFlagsFinal -version | grep ThreadStackSize

intx CompilerThreadStackSize = 2048 {pd product} {default}

intx ThreadStackSize = 1024 {pd product} {command line}

intx VMThreadStackSize = 2048 {pd product} {default}

Usually, there's no need to change the default, because the thread stack will be committed lazily as it grows. So no matter what's the default, the thread will always only commit as much stack as it really uses (at page size granularity). However there's one exception to this rule if Transparent Huge Pages (THP) are turned on by default on a system. In such a case the THP page size of 2mb matches exactly with the 2mb default stack size on aarch64 and most stacks will be backed up by a single huge page of 2mb. This means that the stack will be completely committed to memory right from the start. If you're using hundreds or even thousands of threads, this memory overhead can be considerable.

To mitigate this issue, you can either manually change the stack size on the command line (as described above) or you can change the default for THP from always to madvise on Linux distributions like AL2 (with Linux kernel 5 and higher) on which the setting defaults to always:

# cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never

# echo madvise > /sys/kernel/mm/transparent_hugepage/enabled

# cat /sys/kernel/mm/transparent_hugepage/enabled

always [madvise] never

Notice that even if the the default is changed from always to madvise, the JVM can still use THP for the Java heap and code cache if you specify -XX:+UseTransparentHugePages on the command line.

Looking for x86 shared-objects in JARs

Java JARs can include shared-objects that are architecture specific. Some Java libraries check if these shared objects are found and if they are they use a JNI to call to the native library instead of relying on a generic Java implementation of the function. While the code might work, without the JNI the performance can suffer.

A quick way to check if a JAR contains such shared objects is to simply unzip it and check if any of the resulting files are shared-objects and if an aarch64 (arm64) shared-object is missing:

$ unzip foo.jar

$ find . -name "*.so" -exec file {} \;

For each x86-64 ELF file, check there is a corresponding aarch64 ELF file in the binaries. With some common packages (e.g. commons-crypto) we've seen that even though a JAR can be built supporting Arm manually, artifact repositories such as Maven don't have updated versions. To see if a certain artifact version may have Arm support, consult our Common JARs with native code Table. Feel free to open an issue in this GitHub repo or contact us at ec2-arm-dev-feedback@amazon.com for advice on getting Arm support for a required Jar.

Building multi-arch JARs

Java is meant to be a write once, and run anywhere language. When building Java artifacts that contain native code, it is important to build those libraries for each major architecture to provide a seamless and optimally performing experience for all consumers. Code that runs well on both Graviton and x86 based instances increases the package's utility.

There is nominally a multi-step process to build the native shared objects for each supported architecture before doing the final packaging with Maven, SBT, Gradle etc. Below is an example of how to create your JAR using Maven that contains shared libraries for multiple distributions and architectures for running your Java application interchangeably on AWS EC2 instances based on x86 and Graviton processors:

# Create two build instances, one x86 and one Graviton instance.

# Pick one instance to be the primary instance.

# Log into the secondary instance

$ cd java-lib

$ mvn package

$ find target/ -name "*.so" -type f -print

# Note the directory this so file is in, it will be in a directory

# such as: target/classes/org/your/class/hierarchy/native/OS/ARCH/lib.so

# Log into the primary build instance

$ cd java-lib

$ mvn package

# Repeat the below two steps for each OS and ARCH combination you want to release

$ mkdir target/classes/org/your/class/hierarchy/native/OS/ARCH

$ scp slave:~/your-java-lib/target/classes/org/your/class/hierarchy/native/OS/ARCH/lib.so target/classes/org/your/class/hierarchy/native/OS/ARCH/

# Create the jar packaging with maven. It will include the additional

# native libraries even though they were not built directly by this maven process.

$ mvn package

# When creating a single Jar for all platform native libraries,

# the release plugin's configuration must be modified to specify

# the plugin's `preparationGoals` to not include the clean goal.

# See http://maven.apache.org/maven-release/maven-release-plugin/prepare-mojo.html#preparationGoals

# For more details.

# To do a release to Maven Central and/or Sonatype Nexus:

$ mvn release:prepare

$ mvn release:perform

This is one way to do the JAR packaging with all the libraries in a single JAR. To build all the JARs, we recommend to build on native machines, but it can also be done via Docker using the buildx plug-in, or by cross-compiling inside your build-environment.

Additional options for releasing jars with native code is to: use a manager plugin such as the nar maven plugin

to manage each platform specific Jar. Release individual architecture specific jars, and then use the primary

instance to download these released jars and package them into a combined Jar with a final mvn release:perform.

An example of this methd can be found in the Leveldbjni-native pom.xml files.

Remove Anti-patterns

Anti-patterns can affect the performance on any instance family, but the level of impact can be different. Below is a list of anti-patterns we have found to be particularly impactful on Graviton:

- Excessive exceptions: Throwing exceptions and generating stack-traces

has been observed to cost up to 2x more on Graviton platforms compared to x86.

We recommend not to use Java exceptions as control flow, and to remove

exceptions when they appear in the hot-code path. Identifying hot exceptions can

be done using function profilers like Aperf,

Async-profiler, or Linux

perf. Overhead can be mitigated some by using the-XX:+OmitStackTraceInFastThrowJVM flag to allow the Java runtime to optimize the exception flow for some hot paths. The best solution is to avoid the exceptions as much as possible.

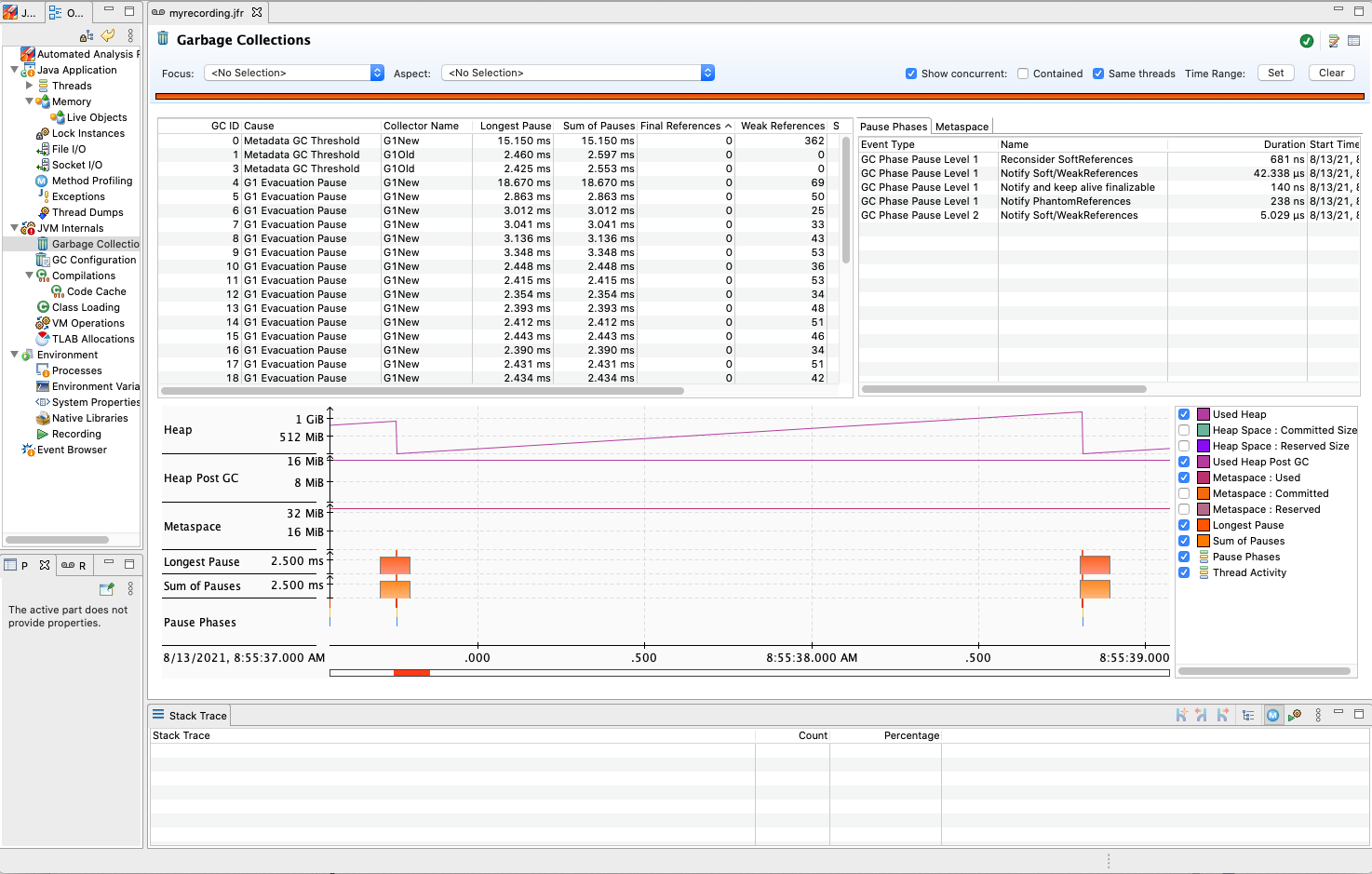

Profiling Java applications

APerf + Async-profiler

To profile Java we recommend using Aperf to gather profiles and view them via a static webpage. On your test system, follow the below directions to profile your Java code using APerf:

# Get latest APerf release onto the machine you are profiling.

# As of Oct 28, 2024 the latest release is v0.13.0.

wget https://github.com/aws/aperf/releases/download/v0.1.13-alpha/aperf-v0.1.13-alpha-aarch64.tar.gz

tar -zxf aperf-v0.1.13-alpha-aarch64.tar.gz

# Get the latest Async profiler

wget https://github.com/async-profiler/async-profiler/releases/download/v3.0/async-profiler-3.0-linux-arm64.tar.gz

tar -zxf async-profiler-3.0-linux-arm64.tar.gz

cd async-profiler-3.0-linux-arm64

sudo mkdir -p /opt/bin

sudo mkdir -p /opt/lib

sudo cp -a bin/* /opt/bin

sudo cp -a lib/* /opt/lib

export PATH=/opt/bin:$PATH

export LD_LIBRARY_PATH=/opt/lib:$LD_LIBRARY_PATH

sudo sysctl -w kernel.kptr_restrict=0

sudo sysctl -w kernel.perf_event_paranoid=-1

cd aperf-v0.1.13-alpha-aarch64

# While the application is running

./aperf record --profile --profile-java --period 300 -r java_record

./aperf report -r java_record -n java_report

# In java_report folder, open index.html in a browser to view report

Linux perf and libperf-jvmti.so

If prefering to use the standard Linux perf tool, we can capture information about symbols

on Java JIT'ed code leveraging the libperf-jvmti.so agent.

Follow the below steps to use the libperf-jvmti.so to dump symbols for

JITed code as the JVM runs.

# Compile your Java application with -g

# find where libperf-jvmti.so is on your distribution

# Run your java app with -agentpath:/path/to/libperf-jvmti.so added to the command line

# Launch perf record on the system

$ perf record -g -k 1 -a -o perf.data sleep 5

# Inject the generated methods information into the perf.data file

$ perf inject -j -i perf.data -o perf.data.jit

# View the perf report with symbol info

$ perf report -i perf.data.jit

# Process the new file, for instance via Brendan Gregg's Flamegraph tools

$ perf script -i perf.data.jit | ./FlameGraph/stackcollapse-perf.pl | ./FlameGraph/flamegraph.pl > ./flamegraph.svg

Build libperf-jvmti.so on Amazon Linux 2

Amazon Linux 2 does not package libperf-jvmti.so by default with the perf yum package for kernel versions <5.10.

Build the libperf-jvmti.so shared library using the following steps:

$ sudo amazon-linux-extras enable corretto8

$ sudo yum install -y java-1.8.0-amazon-corretto-devel

$ cd $HOME

$ sudo yumdownloader --source kernel

$ cat > .rpmmacros << __EOF__

%_topdir %(echo $HOME)/kernel-source

__EOF__

$ rpm -ivh ./kernel-*.amzn2.src.rpm

$ sudo yum-builddep kernel

$ cd kernel-source/SPECS

$ rpmbuild -bp kernel.spec

$ cd ../BUILD

$ cd kernel-*.amzn2

$ cd linux-*.amzn2.aarch64

$ cd tools/perf

$ make

Commonly used Jars that package native artifacts

| Org | jar | Builds on Arm | Arm Artifact available | Minimum Version |

|---|---|---|---|---|

| com.github.luben | zstd-jni | yes | yes | 1.2.0 |

| org.lz4 | lz4-java | yes | yes | 1.4.0 |

| org.xerial.snappy | snappy-java | yes | yes | 1.1.4 |

| org.rocksdb | rocksdbjni | yes | yes | 5.0.1 (7.4.3+ recommended) |

| com.github.jnr | jffi | yes | yes | 1.2.13 |

| org.apache.commons | commons-crypto | yes | yes | 1.1.0 |

| io.netty | netty-transport-native-epoll | yes | yes | 4.1.50 |

| io.netty | netty-tcnative | yes | yes | 2.0.31 |

| org.fusesource.jansi | jansi-native | yes | no | |

| org.fusesource.leveldbjni | leveldbjni-all | no | no | |

| org.fusesource.sigar | sigar | yes (refer https://github.com/hyperic/sigar/pull/140) | debian | 1.6.4 |

| org.apache.hadoop | hadoop-lzo | yes | no |

Updated on 2022-08-02

.NET on Graviton

.NET is an open-source platform for writing different types of applications. Software engineers can write .NET based applications in multiple languages such as C#, F#, and Visual Basic. .NET applications are compiled into Common Intermediate Language (CIL). When an application is executed, the Common Language Runtime (CLR) loads that application binary and uses a just-in-time (JIT) compiler to generate machine code for the architecture being executed on. For more information, please see what is .NET.

.NET Versions

| Version | Linux Arm32 | Linux Arm64 | Notes |

|---|---|---|---|

| .NET 9 | Yes | Yes | v9.0.0 released November 12, 2024 with Arm64 Linux builds. See also Arm64 vectorization in .NET libraries. |

| .NET 8 | Yes | Yes | v8.0.0 released November 14, 2023 with Arm64 Linux builds. See also Arm64 Performance Improvements in .NET 8. For details on .NET 8 and Graviton, check out this blog: Powering .NET 8 with AWS Graviton3: Benchmarks |

| .NET 7 | Yes | Yes | v7.0.0 released November 8, 2022 with Arm64 Linux builds. For more details check out this video: Boosting .NET application performance with Arm64 and AWS Graviton 3 Note that .NET 7 is out of support. |

| .NET 6 | Yes | Yes | V6.0.0 released November 8, 2021 with Arm64 Linux builds. For more details check out this blog: .NET 6 on AWS and video: AWS re:Invent 2021 - Accelerate .NET 6 performance with Arm64 on AWS Graviton2 |

| .NET 5 | Yes | Yes | Arm64-specific optimizations in the .NET libraries and the code produced by RyuJIT. Arm64 Performance in .NET 5. Note that .NET 5 is out of support. |

| .NET Framework 4.x | No | No | The original implementation of the .NET Framework does not support Linux hosts, and Windows hosts are not suported on Graviton. |

| .NET Core 3.1 | Yes | Yes | .NET Core 3.0 added support for Arm64 for Linux. Note that .NET Core 3.1 is out of support. |

| .NET Core 2.1 | Yes* | No | Initial support was for Arm32 was added to .NET Core 2.1. *Operating system support is limited, please see the official documentation. Note that .NET Core 2.1 is out of support. |

.NET 5

With .NET 5 Microsoft has made specific Arm64 architecture optimizations. These optimizations were made in the .NET libraries as well as in the machine code output by the JIT process.

- AWS DevOps Blog Build and Deploy .NET web applications to ARM-powered AWS Graviton 2 Amazon ECS Clusters using AWS CDK

- AWS Compute Blog Powering .NET 5 with AWS Graviton2: Benchmarks

- Microsoft .NET Blog ARM64 Performance in .NET 5

Building & Publishing for Linux Arm64

The .NET SDK supports choosing a Runtime Identifier (RID) used to target platforms where the applications run. These RIDs are used by .NET dependencies (NuGet packages) to represent platform-specific resources in NuGet packages. The following values are examples of RIDs: linux-arm64, linux-x64, ubuntu.14.04-x64, win7-x64, or osx.10.12-x64. For the NuGet packages with native dependencies, the RID designates on which platforms the package can be restored.

You can build and publish on any host operating system. As an example, you can develop on Windows and build locally to target Arm64, or you can use a CI server like Jenkins on Linux. The commands are the same.

dotnet build -r linux-arm64

dotnet publish -c Release -r linux-arm64

For more information about publishing .NET apps with the .NET CLI please see the offical documents.

Node.js on Graviton

Graviton is an excellent choice for running web applications with Node.js. There are a few considerations to be aware of to get the best performance.

Use Multiprocessing

Node.JS is fundamentally single threaded and so on an instance with more than one vCPU (which is most of them!), the node process will leave the CPU underutilized. There a few ways to improve this.

- Use a load balancer, such as Nginx, to balance incoming HTTP requests across multiple processes.

- Use a built in module,

clusterto balance the load across several forks of the node process.

The details of how to do this is beyond the scope of this document, but can be easily found with a few quick searches of the web.

Use Statically Linked Builds

If you download compiled binaries of the Node from Nodejs.org, you will have

statically linked binaries. Some package managers distribute Node as a thin

node binary which is dynamically linked to libnode.so where most of the code

lives. This is fine and allows other applications to link with libnode.so, but

it adds a small amount of extra overhead in each function call since each one

must use an extra step of indirection to load the destination function address.

This hardly matters at all until your application reaches a threshold volume of

incoming requests and it can no longer service all requests coming in. In a

dynamically linked node, this threshold will be lower. This is true on on all

EC2 instance types; it is not unique to Graviton.

Applications Using Many and Complex Regular Expressions

A shortcoming in the just in time compiler in V8 for aarch64 creates a long link

chain of veeneers when evaluating complex regular expressions. A new version of

V8 addresses this, but it has not yet been merged into NodeJS main. If your

application relies heavily on regular expression performance AND you find that

the performance is lower on Graviton, try adding --regexp-interpret-all to

the node arguments to force V8 to interpret rather than compile regular

expressions.

PHP on Graviton

PHP is a general-purpose scripting language geared towards web development. PHP scripts are executed by an interpreter implemented as a plug-in module in web servers, a separate daemon (php-fpm) or a CGI executable (php-cgi).

PHP 7.4 and later are tested to perform well on Graviton. It works out of the box on Ubuntu 22.04 and AL2023, but requires extra steps on AL2.

OPcache on Amazon Linux 2 (AL2)

OPcache improves PHP performance by storing precompiled script bytecode in shared memory, thereby removing the need for PHP to load and parse scripts on each request. Installing it can significantly improve execution time on most workloads. More information about OPcache available in the PHP Manual.

OPcache is installed by default on Amazon Linux 2023 (AL2023) and later, but not yet available in Amazon Linux 2 (AL2). See PHP OPcache Installation on AL2 for manual build and install instructions.

PHP OPcache Installation on Amazon Linux 2 (AL2)

Install PHP

First run sudo amazon-linux-extras install -y php8.0 to install PHP 8 from AL2 extras, if not already installed.

Sanity Check

Verify that OPcache is not already present after installation; stop here if so.

Run the following commands to see if "opcache.so" is present and enabled in php.ini.

php --version prints a "with Zend OPcache" line on successful load.

$ file /usr/lib64/php/modules/opcache.so

/usr/lib64/php/modules/opcache.so: ELF 64-bit LSB shared object <-- already installed

$ php --version

PHP 8.0.30 (cli) (built: Aug 24 2023 20:32:36) ( NTS )

Copyright (c) The PHP Group

Zend Engine v4.0.30, Copyright (c) Zend Technologies

with Zend OPcache v8.0.30, Copyright (c), by Zend Technologies <-- already enabled

Install Dependencies

Install PHP dependencies required to build OPcache. This is ideally done by running sudo yum-builddep php,

which fails in some configurations due to packaging conflict requiring both libzip010-compat-devel and libzip-devel.

Run the following as a workaround:

sudo yum install apr apr-devel apr-util apr-util-bdb apr-util-devel aspell aspell-devel autoconf automake bzip2-devel cpp cyrus-sasl cyrus-sasl-devel elfutils-devel elfutils-libelf-devel enchant enchant-devel expat-devel freetype-devel gcc gcc-c++ gdbm-devel generic-logos-httpd glib2-devel gmp-devel httpd httpd-devel httpd-filesystem httpd-tools libacl-devel libatomic libattr-devel libcurl-devel libdb-devel libedit-devel libgcrypt-devel libgpg-error-devel libICE libicu-devel libitm libjpeg-turbo-devel libmpc libpng-devel libsanitizer libSM libsodium libsodium-devel libtool libtool-ltdl libtool-ltdl-devel libwebp-devel libX11 libX11-common libX11-devel libXau libXau-devel libxcb libxcb-devel libXext libxml2-devel libXpm libXpm-devel libxslt libxslt-devel libXt libzip-devel lm_sensors-devel m4 mailcap mod_http2 mpfr ncurses-c++-libs ncurses-devel net-snmp net-snmp-agent-libs net-snmp-devel net-snmp-libs oniguruma oniguruma-devel openldap-devel pam-devel perl-devel perl-ExtUtils-Install perl-ExtUtils-MakeMaker perl-ExtUtils-Manifest perl-ExtUtils-ParseXS perl-Test-Harness popt-devel postgresql postgresql-devel pyparsing recode recode-devel rpm-devel sqlite-devel systemd-devel systemtap-sdt-devel t1lib t1lib-devel tcp_wrappers-devel tokyocabinet tokyocabinet-devel unixODBC unixODBC-devel xorg-x11-proto-devel xz-devel

Build Source RPM

cd ~

yumdownloader --source php

rpm -ivh ./php-8.0.30-1.amzn2.src.rpm

sudo yum-builddep php

cd ./rpmbuild/SPECS

rpmbuild -ba php.spec

Install OPcache

cd ~/rpmbuild/BUILD

sudo cp ./php-8.0.30/build-cgi/modules/opcache.so /usr/lib64/php/modules/opcache.so

sudo cp ./php-8.0.30/10-opcache.ini /etc/php.d/10-opcache.ini

Verify installation by running php --version. Output show now look similar to above examples.

Reboot your instance or restart php-fpm and your http server to use OPcache.

Python on Graviton

Python is an interpreted, high-level, general-purpose programming language, with interpreters available for many operating systems and architectures, including arm64. Read more on Wikipedia

1. Installing Python packages

When pip (the standard package installer for Python) is used, it pulls the packages from Python Package Index and other indexes. To ensure you can install binary packages from Python Package Index, make sure to update your pip installation to a new enough version (>19.3).

# To ensure an up-to-date pip version

sudo python3 -m pip install --upgrade pip

AWS is actively working to make pre-compiled packages available for Graviton. You can see a current list of the over 200 popular python packages we track nightly for AL2 and Ubuntu for Graviton support status at our Python wheel tester.

In the case pip could not find a pre-compiled package, it automatically downloads, compiles, and builds the package from source code.

Normally it may take a few more minutes to install the package from source code than from pre-built. For some large packages,

it may take up to 20 minutes. In some cases, compilation may fail due to missing dependencies. Before trying to build a python package from source, try

python3 -m pip install --prefer-binary <package> to attempt to install a wheel that is not the latest version. Sometimes automated package builders

will push a release without all the wheels due to failures during a build that will be corrected at a later date. If this is not an option, follow

the following instructions to build a python package from source.

1.1 Prerequisites for installing Python packages from source

For installing common Python packages from source code, we need to install the following development tools:

On AmazonLinux2 or RedHat:

sudo yum install "@Development tools" python3-pip python3-devel blas-devel gcc-gfortran lapack-devel

python3 -m pip install --user --upgrade pip

On Debian/Ubuntu:

sudo apt update

sudo apt-get install build-essential python3-pip python3-dev libblas-dev gfortran liblapack-dev

python3 -m pip install --user --upgrade pip

On all distributions, additional compile time dependencies might be needed depending on the Python modules you are trying to install.

1.2 Recommended versions

When adopting Graviton, it is recommended to use recent software versions as much as possible, and Python is no exception.